Pod cannot be deleted

Add the flags --grace-period=0 --force to force the deletion:

kubectl delete pod logging-fluentd-fluentd-v1-0-xtqdv -n kube-system --grace-period=0 --force

Namespace stuck in Terminating cannot be deleted

- Run kubectl edit namespace on it and remove the entries under finalizers

- Force the deletion again
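Before clearing the finalizers it can help to see what is still left in the namespace, since leftover objects are a common reason it stays in Terminating. The helper below is a minimal sketch, not part of the original notes: the function name list_namespace_leftovers is made up for illustration, and it assumes kubectl 1.11 or newer (for kubectl api-resources) that already points at the affected cluster.

```shell
# List every namespaced object that still exists in a namespace.
# Assumption: kubectl >= 1.11 is on PATH and configured for the cluster.
list_namespace_leftovers() {
  ns="$1"
  # Ask the API server for all namespaced resource types that support "list",
  # then query each type in the given namespace.
  kubectl api-resources --verbs=list --namespaced -o name |
  while read -r resource; do
    kubectl get "$resource" -n "$ns" --ignore-not-found -o name
  done
}

# Usage (not run here): list_namespace_leftovers my-stuck-namespace
```

If the listing is empty, removing the finalizers as described above is usually the only remaining step.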

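Pod cannot be deleted: device or resource busy

Force deletion with --grace-period=0 --force removes the pod, but the reason the normal deletion failed still needs to be investigated.

Check the kubelet log

systemctl status kubelet shows the most recent kubelet log lines, which include the following: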

Mar 05 19:59:13 VM_3_18_centos kubelet[554]: E0305 19:59:13.045986 554 nestedpendingoperations.go:262] Operation for "\"kubernetes.io/secret/f81a47de-52bc-11ea-84c5-123e2521a24c-default-token-p97zd\" (\"f81a47de-52bc-11ea-84c5-123e2521a24c\")" failed. No retries permitted until 2020-03-05 20:01:15.045962043 +0800 CST (durationBeforeRetry 2m2s). Error: UnmountVolume.TearDown failed for volume "default-token-p97zd" (UniqueName: "kubernetes.io/secret/f81a47de-52bc-11ea-84c5-123e2521a24c-default-token-p97zd") pod "f81a47de-52bc-11ea-84c5-123e2521a24c" (UID: "f81a47de-52bc-11ea-84c5-123e2521a24c") : remove /var/lib/kubelet/pods/f81a47de-52bc-11ea-84c5-123e2521a24c/volumes/kubernetes.io~secret/default-token-p97zd: device or resource busy

The log shows that removing the directory /var/lib/kubelet/pods/f81a47de-52bc-11ea-84c5-123e2521a24c/volumes/kubernetes.io~secret/default-token-p97zd failed because the resource is busy, i.e. it is still in use by another process.
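When the log is long, the affected paths can be pulled out mechanically. The following is a small sketch under the assumption that kubelet logs to the systemd journal; the function name busy_paths is hypothetical and the pattern only matches lines shaped like the one above.

```shell
# Print the distinct paths that kubelet failed to remove with
# "device or resource busy". Reads kubelet log lines on stdin.
busy_paths() {
  sed -n 's/.*: remove \(.*\): device or resource busy.*/\1/p' | sort -u
}

# Usage on a systemd host (not run here):
#   journalctl -u kubelet --no-pager | busy_paths
```

Each printed path can then be passed to the mount-leak script in the next step.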

Find the processes that hold the directory

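#!/bin/bash
# Usage: bash leak.sh <mount-path>
# Prints every process whose mount table still contains <mount-path>, then the
# mount namespaces among them that belong to a docker-containerd-shim process.
target="$1"
declare -A map
# Matches look like "/proc/<pid>/mounts:<device> <mount point> ...".
for i in $(grep -F -- "$target" /proc/[0-9]*/mounts 2>/dev/null | awk '{print $1"#"$2}')
do
  pid=$(echo "$i" | awk -F "/" '{print $3}')
  point=$(echo "$i" | awk -F "#" '{print $2}')
  mnt=$(readlink "/proc/$pid/ns/mnt" 2>/dev/null)   # e.g. mnt:[4026532254]
  [ -n "$mnt" ] || continue                         # process already exited
  map["$mnt"]="exist"
  # cmdline is NUL-separated; the NULs are dropped, so arguments run together.
  cmd=$(tr -d '\0' < "/proc/$pid/cmdline" 2>/dev/null)
  echo -e "$pid\t$mnt\t$cmd\t$point"
done
for i in $(pgrep -f docker-containerd-shim)
do
  mnt=$(readlink "/proc/$i/ns/mnt" 2>/dev/null)
  if [[ -n "$mnt" && "${map[$mnt]}" == "exist" ]]; then
    echo "$mnt"
  fi
done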

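Run the script (it uses bash features, so invoke it with bash) against the busy path from the kubelet log:

bash leak.sh /var/lib/kubelet/pods/f81a47de-52bc-11ea-84c5-123e2521a24c/volumes/kubernetes.io~secret/default-token-p97zd

It lists the processes that hold the directory: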

6466 mnt:[4026532254] /bin/sh-c/usr/output/bin/log-collector /rootfs/var/lib/kubelet/pods/f81a47de-52bc-11ea-84c5-123e2521a24c/volumes/kubernetes.io~secret/default-token-p97zd

6482 mnt:[4026532254] /usr/output/bin/log-collector /rootfs/var/lib/kubelet/pods/f81a47de-52bc-11ea-84c5-123e2521a24c/volumes/kubernetes.io~secret/default-token-p97zd

6495 mnt:[4026532254] /usr/local/bin/ruby/usr/local/bin/fluentd-c/tmp/fluentd/fluent.conf /rootfs/var/lib/kubelet/pods/f81a47de-52bc-11ea-84c5-123e2521a24c/volumes/kubernetes.io~secret/default-token-p97zd

6502 mnt:[4026532254] /usr/local/bin/ruby-Eascii-8bit:ascii-8bit/usr/local/bin/fluentd-c/tmp/fluentd/fluent.conf--under-supervisor /rootfs/var/lib/kubelet/pods/f81a47de-52bc-11ea-84c5-123e2521a24c/volumes/kubernetes.io~secret/default-token-p97zd

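The commands below show that the directory is held by container 15f673a9022d9f1eed1d62c3560f191172f2226424a7cb5a48c426c3d722202d; the other processes listed above descend from the same container.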

[root@VM_3_18_centos temp]# ps -ef|grep 6466

root 6466 6449 0 Feb27 ? 00:00:00 /bin/sh -c /usr/output/bin/log-collector

root 6482 6466 0 Feb27 ? 00:08:00 /usr/output/bin/log-collector

root 11708 9812 0 20:33 pts/3 00:00:00 grep --color=auto 6466

[root@VM_3_18_centos temp]# ps -ef|grep 6449

root 6449 740 0 Feb27 ? 00:04:41 docker-containerd-shim 15f673a9022d9f1eed1d62c3560f191172f2226424a7cb5a48c426c3d722202d /var/run/docker/libcontainerd/15f673a9022d9f1eed1d62c3560f191172f2226424a7cb5a48c426c3d722202d docker-runc

root 6466 6449 0 Feb27 ? 00:00:00 /bin/sh -c /usr/output/bin/log-collector

root 11740 9812 0 20:33 pts/3 00:00:00 grep --color=auto 6449
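As an alternative to walking the parent chain with ps, the container ID can usually be read from the process's cgroup file. This is a sketch that assumes Docker-managed cgroups, where the path contains the full 64-character container ID; the function name container_id_from_cgroup is made up for illustration.

```shell
# Extract 64-character hexadecimal container IDs from cgroup lines on stdin.
container_id_from_cgroup() {
  grep -oE '[0-9a-f]{64}' | sort -u
}

# Usage (not run here), for PID 6466 from the listing above:
#   container_id_from_cgroup < /proc/6466/cgroup
```

The first 12 characters of the result are enough for docker exec or docker inspect.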

Enter the container and inspect its mounts

[root@VM_3_18_centos temp]# docker exec -it 15f673a9022d bash

root@VM_3_18_centos:/# df -lh

Filesystem Size Used Avail Use% Mounted on

overlay 50G 32G 16G 68% /

tmpfs 7.8G 0 7.8G 0% /dev

tmpfs 7.8G 0 7.8G 0% /sys/fs/cgroup

/dev/vda1 50G 32G 16G 68% /rootfs

devtmpfs 7.8G 0 7.8G 0% /rootfs/dev

tmpfs 7.8G 36K 7.8G 1% /rootfs/dev/shm

tmpfs 7.8G 0 7.8G 0% /rootfs/sys/fs/cgroup

tmpfs 7.8G 1.4M 7.8G 1% /rootfs/run

tmpfs 1.6G 0 1.6G 0% /rootfs/run/user/0

/dev/mapper/vg1-lv_data1 99G 38G 56G 41% /rootfs/data1

overlay 50G 32G 16G 68% /rootfs/var/lib/docker/overlay2/159a6570b650c2b9948bcdd73fbb64d07ac01e9e645e8efac8ad0ac18aefe108/merged

overlay 50G 32G 16G 68% /rootfs/var/lib/docker/overlay2/d5d9d7487156af6e2eb2393dc8de03fa09f6f83f5d54537ffa7cfe8d8415501d/merged

overlay 50G 32G 16G 68% /rootfs/var/lib/docker/overlay2/0292cf46d39e54c1c4cff865f6b1139d347a5cc7a5e2d7d6b36d72a54884a6b0/merged

overlay 50G 32G 16G 68% /rootfs/var/lib/docker/overlay2/9a16055cf90aa62bb52887a8595683d103a24b0c645fa5a52db6e998a92d5ec3/merged

overlay 50G 32G 16G 68% /rootfs/var/lib/docker/overlay2/81a89f4574ae00e910c33da9836005581c82ad4503eb30a34c2c6772bc7af7c0/merged

overlay 50G 32G 16G 68% /rootfs/var/lib/docker/overlay2/d23203b9a9246430c9476c3e926c7a02edfaef6a738104f65800e182d1d88e69/merged

overlay 50G 32G 16G 68% /rootfs/var/lib/docker/overlay2/0984e91b72ebbb53777c20a9cfcea99d6005d75fd06fd96acd342784e0f0d91a/merged

overlay 50G 32G 16G 68% /rootfs/var/lib/docker/overlay2/cc154b326ce556dfc79296dcc67306cb98d7b813eff48ac0804281619b5a562e/merged

overlay 50G 32G 16G 68% /rootfs/var/lib/docker/overlay2/af72ff5d556b55660745a52d5da4bcfd8d3e31bb3a930f1262404ee51d64a640/merged

overlay 50G 32G 16G 68% /rootfs/var/lib/docker/overlay2/1e34251601d87a7aa941ecce91684f1d488019c59cbe95bc71d08772498afd31/merged

overlay 50G 32G 16G 68% /rootfs/var/lib/docker/overlay2/8955b5feb402eeb395a92c8b5a2d5d8bb98bc22d4072a7d6db517ba01960708c/merged

overlay 50G 32G 16G 68% /rootfs/var/lib/docker/overlay2/82c9393db7a858a7f24dfc13ad66d89004d0a28325114e30d3c5c051f1b5e30f/merged

overlay 50G 32G 16G 68% /rootfs/var/lib/docker/overlay2/5abfcde0b01e202d0e28d954fd8ac95ad5794ba2eca0688146a51a3217bc5bfc/merged

overlay 50G 32G 16G 68% /rootfs/var/lib/docker/overlay2/bd376fdbf0694a31d0e1d21caefd2c4baa75dd752990963eab85f06936b1ef8d/merged

overlay 50G 32G 16G 68% /rootfs/var/lib/docker/overlay2/e38e2cd0ee11554d24b32d52c46438247e22c4f279ff17b0448607a80de8ebf6/merged

overlay 50G 32G 16G 68% /rootfs/var/lib/docker/overlay2/3b9ecdaa7b34e5eb9f7334730998801c9ef5ad23fa43e9eea279e3354bd6a5bc/merged

overlay 50G 32G 16G 68% /rootfs/var/lib/docker/overlay2/ba5f235ed861d2b4c172cc9ef4752e1c09700498ec7be64ffb290f8739781241/merged

tmpfs 7.8G 12K 7.8G 1% /rootfs/var/lib/kubelet/pods/8606fcaf-4953-11ea-84c5-123e2521a24c/volumes/kubernetes.io~secret/default-token-p97zd

shm 64M 0 64M 0% /var/lib/docker/containers/b4b13ba5215d8156105ace44bb815d302e6d05c321acc257bc3f43dab1e9b463/shm

tmpfs 7.8G 12K 7.8G 1% /rootfs/var/lib/kubelet/pods/2fdd171c-4c9d-11ea-84c5-123e2521a24c/volumes/kubernetes.io~secret/default-token-p97zd

shm 64M 0 64M 0% /var/lib/docker/containers/0e77a909caa44c1897dfa1759313000ea475be1c82e1596bea7c3677c372a63f/shm

tmpfs 7.8G 12K 7.8G 1% /rootfs/var/lib/kubelet/pods/0363a3e7-5217-11ea-84c5-123e2521a24c/volumes/kubernetes.io~secret/default-token-p97zd

shm 64M 0 64M 0% /var/lib/docker/containers/83418652ccddb66b1085854331be045882cef8b3d4f0b302f6cd989417c49139/shm

tmpfs 7.8G 12K 7.8G 1% /rootfs/var/lib/kubelet/pods/f81a47de-52bc-11ea-84c5-123e2521a24c/volumes/kubernetes.io~secret/default-token-p97zd

shm 64M 0 64M 0% /var/lib/docker/containers/31f745a14c38e220639d90518212ea2f84b7da26bd1b0a342c540656f317552e/shm

tmpfs 7.8G 12K 7.8G 1% /rootfs/var/lib/kubelet/pods/484cc6b1-52bd-11ea-84c5-123e2521a24c/volumes/kubernetes.io~secret/default-token-p97zd

shm 64M 0 64M 0% /var/lib/docker/containers/65ac76254706192ead75c03bfe8c8c27546f2b287a6d1c1b6ed8417ad9bce74c/shm

tmpfs 7.8G 12K 7.8G 1% /rootfs/var/lib/kubelet/pods/fbca1cd8-5393-11ea-84c5-123e2521a24c/volumes/kubernetes.io~secret/default-token-p97zd

shm 64M 0 64M 0% /var/lib/docker/containers/b463a18ff301ab25d5d3658fd8ccf85dc7473e3bf57dc35d80bf158d44c85e7c/shm

tmpfs 7.8G 12K 7.8G 1% /rootfs/var/lib/kubelet/pods/ec42542a-56d5-11ea-84c5-123e2521a24c/volumes/kubernetes.io~secret/default-token-p97zd

shm 64M 0 64M 0% /var/lib/docker/containers/550d6c5f2003e25328fc4eddfc5e6f4074434ed8a127917989a1510d92d4b30d/shm

tmpfs 7.8G 12K 7.8G 1% /rootfs/var/lib/kubelet/pods/85bbf9cf-57a2-11ea-84c5-123e2521a24c/volumes/kubernetes.io~secret/default-token-p97zd

shm 64M 0 64M 0% /var/lib/docker/containers/8af73a7c32284a3b87f259735986df1882fbe73b789f157f255f4fd4a0122557/shm

tmpfs 7.8G 12K 7.8G 1% /run/secrets/kubernetes.io/serviceaccount

shm 64M 0 64M 0% /dev/shm

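So the root cause is that this container still holds the directory (it appears inside the container under /rootfs), which makes the pod deletion fail.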

Pod with hostNetwork cannot reach the kubernetes service at 169.169.0.1

Deploy a pod that uses hostNetwork:

apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: nginx-ingress-ds
  labels:
    app: nginx-ingress-ctrl
spec:
  template:
    metadata:
      labels:
        app: nginx-ingress-ctrl
    spec:
      serviceAccountName: nginx-ingress
      hostNetwork: true  # <<< uses the host network
      containers:
      - image: nginxdemos/nginx-ingress:1.0.0
        name: nginx-ingress-lb
        ports:
        - containerPort: 80
          hostPort: 80
        - containerPort: 443
          hostPort: 443
        env:
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        args:
        - -default-server-tls-secret=$(POD_NAMESPACE)/default-server-secret

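The manifest above sets hostNetwork: true. In that mode we found that the pod looks up the destination 169.169.0.1:443 in the node's own routing table. The node has no route to 169.169.0.1, so the traffic follows the default route, the connection to the kubernetes service fails, and the pod ends up failing.

When the pod is not in host network mode, its traffic to the kubernetes service (destination IP/port 169.169.0.1:443) passes through iptables and is DNATed to the API server's IP:6443.

So a pod that needs to reach the built-in kubernetes service (169.169.0.1) may not be able to use hostNetwork mode.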
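To see whether a node actually has a rule for the service address, the NAT table can be inspected directly. The sketch below assumes kube-proxy runs in iptables mode and writes its rules to the KUBE-SERVICES chain; the function name has_service_rule is hypothetical.

```shell
# Succeeds if the iptables-save output on stdin contains a KUBE-SERVICES
# rule for the given cluster IP and port.
# Assumption: kube-proxy runs in iptables mode on this node.
has_service_rule() {
  ip="$1"
  port="$2"
  grep -F -- "-A KUBE-SERVICES" |
    grep -F -- "-d $ip/32" |
    grep -qE -- "--dport $port( |\$)"
}

# Usage on a node, as root (not run here):
#   iptables-save -t nat | has_service_rule 169.169.0.1 443 && echo "rule present"
#   ip route get 169.169.0.1    # shows which route the node itself would pick
```

If the rule is missing on the node where the host-network pod runs, that is consistent with the behaviour described above.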

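Deployment and pod state out of sync

- Updating a deployment does not update its ReplicaSet or pods

- Creating a deployment does not create a ReplicaSet or pods

- A deployment cannot be deleted

Check whether kube-controller-manager is healthy, and whether the problem goes away after restarting it.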

Calling a service in another namespace

Address the service as servicename.namespace
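The short form resolves through the pod's DNS search path; the fully qualified name can be spelled out when that is not enough. A minimal sketch, assuming the default cluster domain cluster.local (the function name and the example service and namespace are made up):

```shell
# Build the fully qualified DNS name of a service.
# $1 = service, $2 = namespace, $3 = cluster domain (assumed default: cluster.local)
service_dns_name() {
  printf '%s.%s.svc.%s\n' "$1" "$2" "${3:-cluster.local}"
}

# Example: service "redis" in namespace "cache"
service_dns_name redis cache
```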

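How to view the Kubernetes data stored in etcd

etcd is operated with the command-line tool etcdctl, which speaks two mutually incompatible API versions. Older etcdctl releases (before 3.4) default to v2, while a Kubernetes cluster stores its data through v3, and v3 data is not visible through the v2 API. It took me some digging to find this out.

Select the API version with an environment variable: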

export ETCDCTL_API=3

etcd keys are laid out like paths in a Linux file system. List all keys to take a look:

etcdctl get / --prefix --keys-only
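Listing every key is noisy on a real cluster, so it is often easier to narrow the prefix. The sketch below assumes the default /registry key prefix and the common /registry/RESOURCE/NAMESPACE/NAME layout; some resources (services, for example) use a different layout, and secured clusters also need etcdctl's --endpoints, --cacert, --cert and --key flags. Both function names are hypothetical.

```shell
# Print the etcd key prefix for a resource type.
# $1 = resource (pods, deployments, ...), $2 = optional namespace
# Assumption: the API server uses the default /registry prefix.
registry_prefix() {
  if [ -n "$2" ]; then
    printf '/registry/%s/%s/\n' "$1" "$2"
  else
    printf '/registry/%s/\n' "$1"
  fi
}

# List only the keys under that prefix.
list_registry_keys() {
  ETCDCTL_API=3 etcdctl get "$(registry_prefix "$1" "$2")" --prefix --keys-only
}

# Usage (not run here): list_registry_keys pods kube-system
```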

Setting the kubeconfig file

kubectl reads its kubeconfig from $HOME/.kube/config by default. To use a file in another location, set the following environment variable:

export KUBECONFIG=/etc/kubernetes/kubelet.conf

kubectl get nodes

Kubernetes: "Killing container with id docker://xxxx: Need to kill Pod"

On Kubernetes v1.9.7, when deleting a pod fails, kubectl describe shows the following events for the pod:

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Killing 16s (x19871 over 26d) kubelet, cn-hangzhou.i-bp1azsintzya8q0ykjsk Killing container with id docker://log-collector:Need to kill Pod

This is a sporadic Kubernetes bug. Force the deletion with:

kubectl delete pod xxxxxx --grace-period=0 --force

kubectl prints a warning about the risk:

warning: Immediate deletion does not wait for confirmation that the running resource has been terminated. The resource may continue to run on the cluster indefinitely.

Problem: root container /kubepods doesn't exist

Running kubectl describe node k8s-node1 reports the error root container /kubepods doesn't exist.

Solution:

Add --cgroups-per-qos=false --enforce-node-allocatable="" to the kubelet configuration file.

Steps:

vi /etc/kubernetes/kubelet

KUBELET_ARGS="--api-servers=http://10.254.0.53:8080 --hostname-override=k8s-node1 --logtostderr=false --log-dir=/var/log/kubernetes --v=2 --cgroups-per-qos=false --enforce-node-allocatable=''"

Creating a deployment

- kubectl create -f nginx-deployment.yaml

Error: No API token found for service account "default"

kubectl describe rs nginx-deployment-5487769f48

Error: Error creating: No API token found for service account "default", retry after the token is automatically created and added to the service account

Solution:

vi /etc/kubernetes/apiserver

Remove ServiceAccount from the admission control list.

This workaround is not recommended; it is better to configure a kubeconfig for kubelet.

server misbehaving

Error: Error from server: error dialing backend: dial tcp: lookup k8s-node1 on 10.0.6.5:53: server misbehaving

vi /etc/hosts

Add a mapping from each node's IP to its Kubernetes node name.
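Editing the file by hand on several machines is easy to get wrong, so the mapping can also be appended by a small idempotent helper. This is a sketch only: the function name add_host_entry is made up, and the IP used in the usage line is a placeholder that has to be replaced with the node's real address.

```shell
# Append "<ip> <name>" to a hosts file unless the name is already mapped.
# $1 = node IP, $2 = node name, $3 = hosts file (defaults to /etc/hosts)
add_host_entry() {
  ip="$1"
  name="$2"
  hosts_file="${3:-/etc/hosts}"
  # Look for the name as a whole field on any non-comment line.
  if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$hosts_file"; then
    echo "$name already present in $hosts_file"
  else
    printf '%s\t%s\n' "$ip" "$name" >> "$hosts_file"
  fi
}

# Usage as root on the master (not run here; the IP is a placeholder):
#   add_host_entry 192.0.2.10 k8s-node1
```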

no route to host

Error: Error from server: error dialing backend: dial tcp 10.254.0.54:10250: getsockopt: no route to host

Stop and disable the firewall on the node:

systemctl stop firewalld

systemctl disable firewalld

No nodes available

- kubectl get nodes shows no available nodes

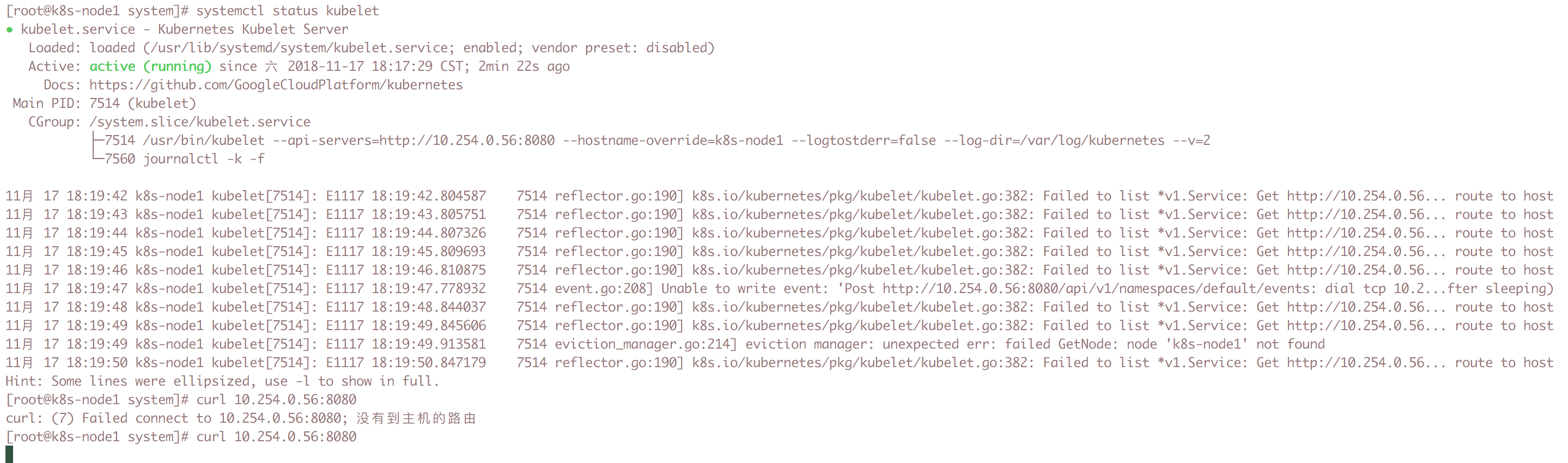

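- Check the kubelet startup log on the node with systemctl status kubelet

- The node turns out to be unable to reach port 8080 on the master with curl

- Stop the firewall on the master

systemctl stop firewalld.service

- The node can now reach the master

If no nodes are available after installing flannel, restart kubelet.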

Pod gets "x509: certificate has expired or is not yet valid" when accessing the Kubernetes API

E0124 03:12:43.194967 1 reflector.go:199] k8s.io/dns/vendor/k8s.io/client-go/tools/cache/reflector.go:94: Failed to list *v1.Endpoints: Get https://169.169.0.1:443/api/v1/endpoints?resourceVersion=0: x509: certificate has expired or is not yet valid

I0124 03:12:43.561626 1 dns.go:174] DNS server not ready, retry in 500 milliseconds

Cause: the clocks on the nodes are out of sync.

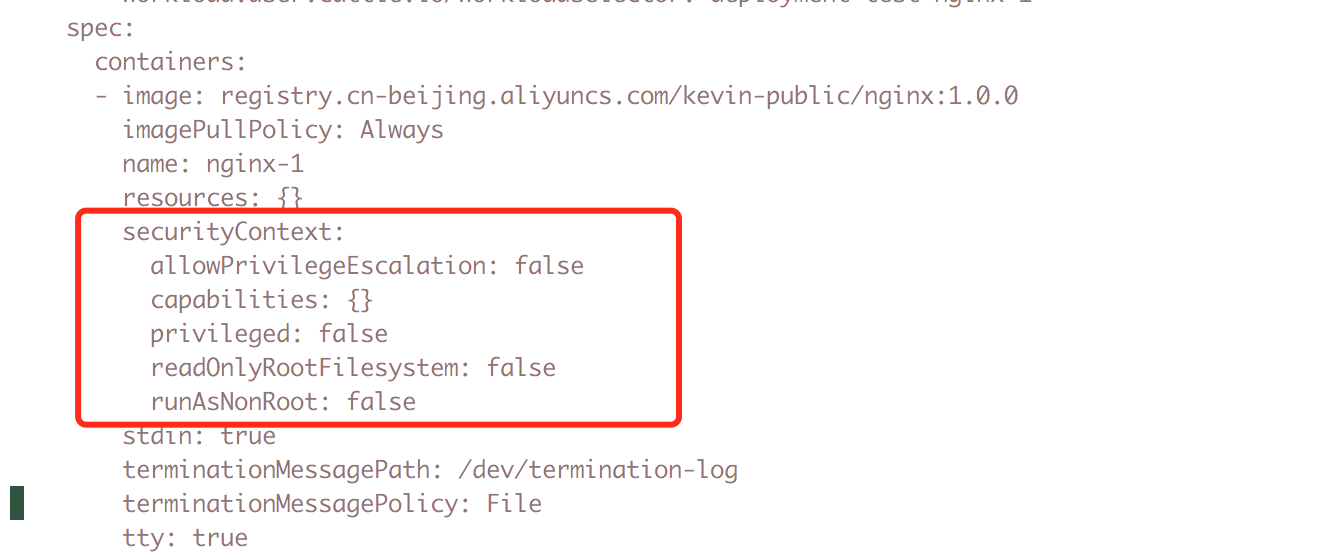
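A quick way to confirm the skew is to compare epoch timestamps taken on two machines. The helper below is a minimal sketch; the function name clock_skew_seconds is made up, and the ssh call in the usage line assumes password-less access to the node. The ssh round trip adds a little error, so only differences of more than a few seconds are meaningful.

```shell
# Absolute difference in seconds between two epoch timestamps.
# $1, $2 = output of `date +%s` taken on two different nodes
clock_skew_seconds() {
  diff=$(( $1 - $2 ))
  if [ "$diff" -lt 0 ]; then
    diff=$(( 0 - diff ))
  fi
  echo "$diff"
}

# Usage from the master (not run here):
#   clock_skew_seconds "$(date +%s)" "$(ssh k8s-node1 date +%s)"
```

Once the skew is confirmed, synchronising all nodes against the same time source (for example with chrony or ntpd) removes the certificate error.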

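Rancher deployment fails with "container has runAsNonRoot and image will run as root"

When a container is deployed through Rancher, it fails to start with the message container has runAsNonRoot and image will run as root.

kubectl edit deploy -n test nginx shows that the deployment has a securityContext set.

After removing the securityContext setting, the service starts normally.

So Rancher applies some security settings when it starts a container, and a container whose image runs as root then fails to start.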
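Whether an image will trip this check can be verified up front from the user recorded in the image. The sketch below classifies that value; the function name image_user_is_root is hypothetical, and it treats an empty user, UID 0 and the name root as root.

```shell
# Succeeds if the given image user means "runs as root".
# $1 = the image's Config.User value, e.g. "", "0", "root", "1001", "101:101"
image_user_is_root() {
  user="${1%%:*}"          # drop an optional ":group" suffix
  case "$user" in
    ""|0|root) return 0 ;;
    *) return 1 ;;
  esac
}

# Usage (not run here; assumes the image is present in the local Docker daemon):
#   image_user_is_root "$(docker inspect --format '{{.Config.User}}' nginx)" \
#     && echo "image runs as root"
```

Instead of dropping the securityContext entirely, another option is to keep it and run the container under a non-zero UID, provided the image supports that.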

Pod creation fails with "OCI runtime create failed"

kubectl describe pod shows the following:

Warning FailedCreatePodSandBox 3m (x12 over 4m) kubelet, 10.6.1.30 Failed create pod sandbox: rpc error: code = Unknown desc = failed to start sandbox container for pod "service-im-search-68948b4bcd-hnwrw": Error response from daemon: OCI runtime create failed: container_linux.go:345: starting container process caused "process_linux.go:297: getting the final child's pid from pipe caused \"read init-p: connection reset by peer\"": unknown

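The cause was a wrong memory limit: it should have been 200Mi but was written as 200m. The m suffix is the unit used for CPU (millicores); on a memory value it means thousandths of a byte.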
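This typo is easy to catch before the manifest is applied. The check below is a small sketch with a made-up function name; it only flags a memory quantity that ends in a lowercase m and does not validate quantities in general.

```shell
# Warn when a memory quantity uses the milli suffix "m" instead of "Mi"/"M".
# $1 = memory request or limit as written in the manifest
check_memory_quantity() {
  case "$1" in
    *[0-9]m)
      echo "suspicious: '$1' is in milli-bytes; did you mean '${1%m}Mi'?"
      return 1
      ;;
    *)
      echo "ok: $1"
      ;;
  esac
}

# Example
check_memory_quantity 200Mi
```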

Node startup error: failed to get fs info for "runtime": unable to find data for container /

Restart the node.