Directory isolation

Create the directory

Create usr under the working directory and copy all files from the host's /usr into it:

cp -r /usr .

Start the program

Start a program in its own mount namespace:

unshare -m bash

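If a directory mounted after unshare is still visible through mount in other mount namespaces, download a newer unshare binary. The cause is mount propagation: on systemd-based hosts / is mounted shared, so new mounts propagate between namespaces. Newer util-linux releases (2.27 and later) make the mounts in the new namespace private by default (--propagation private); with an older unshare, running mount --make-rprivate / inside the new namespace has the same effect.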
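To check whether a mount point is shared, its propagation type can be read from /proc/self/mountinfo. The sketch below assumes the field layout documented in proc(5); propagation_of is a hypothetical helper name, and on systems with a recent util-linux, findmnt -o TARGET,PROPAGATION reports the same information.

```shell
#!/bin/bash
# Print the propagation type (shared, slave or private) of MOUNTPOINT.
# Reads /proc/self/mountinfo, or another file in the same format given as $2.
# Field 5 is the mount point; the optional fields from field 7 up to the "-"
# separator carry "shared:N" / "master:N" tags (see proc(5)).
propagation_of() {
    local mountpoint=$1 file=${2:-/proc/self/mountinfo}
    awk -v mp="$mountpoint" '
        $5 == mp {
            kind = "private"
            for (i = 7; i <= NF && $i != "-"; i++) {
                if ($i ~ /^shared:/) kind = "shared"
                else if ($i ~ /^master:/ && kind == "private") kind = "slave"
            }
            result = kind   # a later mount stacked on the same path wins
        }
        END {
            if (result == "") exit 1
            print result
        }
    ' "$file"
}
```

Inside the new namespace, propagation_of / should print private once mount --make-rprivate / (or a newer unshare) has been used.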

Mount a directory

This mimics docker run -v, which mounts a directory from outside the container into the container:

mount --bind /data1 data

Inside this mount namespace, mount now shows:

[root@k8s-master mount]# mount |grep data

/dev/mapper/centos-root on /root/docker/data type xfs (rw,relatime,attr2,inode64,noquota)

The mount does not appear in other mount namespaces.

chroot

Running chroot . usr/bin/bash fails:

[root@k8s-master docker]# chroot . usr/bin/bash

chroot: failed to run command ‘usr/bin/bash’: No such file or directory

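This is because bash needs shared libraries that do not exist in the new root, so it cannot be started and chroot reports the error above. The "No such file or directory" refers to the missing dynamic loader /lib64/ld-linux-x86-64.so.2, not to bash itself. To fix it, use ldd /bin/bash to find the libraries bash needs: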

[root@k8s-master docker]# ldd /bin/bash

linux-vdso.so.1 => (0x00007ffe109b8000)

libtinfo.so.5 => /lib64/libtinfo.so.5 (0x00007efd3a4e7000)

libdl.so.2 => /lib64/libdl.so.2 (0x00007efd3a2e3000)

libc.so.6 => /lib64/libc.so.6 (0x00007efd39f22000)

/lib64/ld-linux-x86-64.so.2 (0x00007efd3a711000)

Copy the host's /lib64 directory into the working directory:

cp -r /lib64 .

Run chroot . usr/bin/bash again; this time it succeeds:

bash-4.2# pwd

/

bash-4.2# ls

data lib64 usr

bash-4.2# ifconfig

Warning: cannot open /proc/net/dev (No such file or directory). Limited output.

Warning: cannot open /proc/net/dev (No such file or directory). Limited output.

enp0s3: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 10.254.0.139 netmask 255.255.255.0 broadcast 10.254.0.255

ether 08:00:27:49:24:98 txqueuelen 1000 (Ethernet)

Warning: cannot open /proc/net/dev (No such file or directory). Limited output.

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

loop txqueuelen 1000 (Local Loopback)
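Copying all of /lib64 works, but it pulls in far more than bash needs. A more selective alternative, sketched below on the assumption that ldd and GNU cp (for --parents) are available, copies only the files ldd lists; ldd_paths and copy_with_libs are hypothetical helper names.

```shell
#!/bin/bash
# Print the absolute library paths found in `ldd` output read from stdin.
# "libc.so.6 => /lib64/libc.so.6 (0x...)" yields field 3, the loader line
# "/lib64/ld-linux-x86-64.so.2 (0x...)" yields field 1, and linux-vdso.so.1
# (supplied by the kernel, no file on disk) is skipped.
ldd_paths() {
    awk '$2 == "=>" && $3 ~ /^\// { print $3; next }
         $1 ~ /^\// { print $1 }'
}

# Copy BINARY and the libraries it needs into ROOT, keeping their paths
# (/lib64/libc.so.6 -> ROOT/lib64/libc.so.6). -L copies the file a symlink
# points to, so ROOT gets no dangling links. Needs GNU cp for --parents.
copy_with_libs() {
    local binary=$1 root=$2 path
    mkdir -p "$root" || return 1
    for path in "$binary" $(ldd "$binary" | ldd_paths); do
        cp -L --parents "$path" "$root" || return 1
    done
}
```

Run from the working directory, copy_with_libs /usr/bin/bash . should leave enough in place for chroot . usr/bin/bash to start.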

PID isolation

Isolate process IDs with a PID namespace:

unshare -pm bash

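This fails with -bash: fork: Cannot allocate memory. Without -f, unshare replaces itself with bash, so bash stays in the original PID namespace and only the processes it forks enter the new one. The first of them becomes PID 1 of the new namespace; once it exits, the namespace has no init process and every later fork() fails with ENOMEM. The -f option from the unshare man page avoids this: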

- -f, --fork

Fork the specified program as a child process of unshare rather than running it directly. This is useful when creating a new PID namespace.

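Run unshare with -f, and with --mount-proc so that a fresh proc filesystem is mounted and ps only lists the processes in the new namespace:

unshare -fpm --mount-proc bash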

Test:

[root@k8s-master ~]# ps -ef

UID PID PPID C STIME TTY TIME CMD

root 1 0 0 14:29 pts/0 00:00:00 bash

root 13 1 0 14:29 pts/0 00:00:00 ps -ef
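Inside the namespace bash is PID 1, but the host sees the same process under an ordinary PID. On kernels that provide the NSpid field (Linux 4.1 and later), both numbers can be read from /proc/PID/status; ns_pids below is a hypothetical helper name.

```shell
#!/bin/bash
# Print the PIDs of a process in each PID namespace it belongs to, outermost
# (host) first, from the NSpid line of /proc/PID/status (Linux 4.1+).
# An optional second argument reads another status file instead.
ns_pids() {
    local status=${2:-/proc/$1/status}
    awk '$1 == "NSpid:" { $1 = ""; sub(/^ +/, ""); print; found = 1 }
         END { exit (found ? 0 : 1) }' "$status"
}
```

Run on the host with the host PID of the container's bash, it should print that PID followed by 1.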

Network isolation

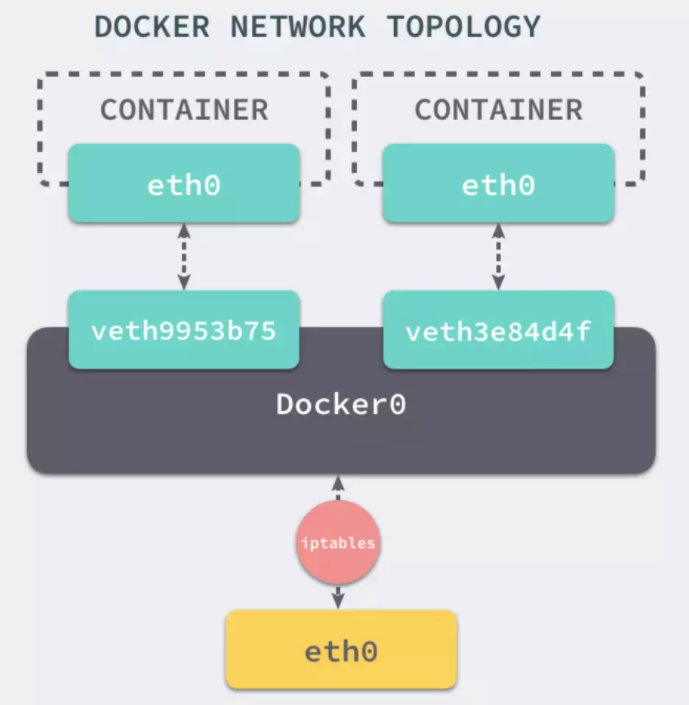

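How container networking is implemented:

- Network namespace: isolates the container's network from the host's;

- veth pair: connects the host and the container; each container gets one pair, with one end inside the container and the other on the host;

- Bridge: makes it easy to manage the many veth devices on the host and connects the containers to one another;

- iptables/Netfilter: SNAT gives containers access to external networks, and DNAT implements container port mapping;

- Routing: a default route in the container sends outbound traffic to the bridge.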

namespace

[root@k8s-master ~]# ip netns add ns1

[root@k8s-master ~]# ls /var/run/netns

ns1

veth pair

- Create a veth pair on the host

[root@k8s-master ~]# ip link add veth0 type veth peer name veth1

[root@k8s-master ~]# ip addr |grep veth

17: veth1@veth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP qlen 1000

18: veth0@veth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP qlen 1000

- Move veth1 into the ns1 namespace

[root@k8s-master ~]# ip link set veth1 netns ns1

[root@k8s-master ~]# ip addr |grep veth

18: veth0@if17: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state LOWERLAYERDOWN qlen 1000

veth1 is no longer visible on the host.

The pair can be deleted with sudo ip link del veth0; deleting either end removes both.

- Bring up the host end of the pair

ip link set dev veth0 up

Create a bridge on the host

- Install the brctl tool

yum install bridge-utils.x86_64

- Create the bridge

brctl addbr docker0

- Attach veth0 to the bridge

brctl addif docker0 veth0

- Assign an IP address to the bridge

ifconfig docker0 172.17.0.1

- Check the interfaces

[root@k8s-master ~]# ip addr

18: veth0@if17: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue master docker0 state LOWERLAYERDOWN qlen 1000

link/ether 9a:b7:00:19:94:12 brd ff:ff:ff:ff:ff:ff

19: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN qlen 1000

link/ether 9a:b7:00:19:94:12 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::6c5c:88ff:fe54:7a0d/64 scope link

valid_lft forever preferred_lft forever
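The same host-side setup can also be done with iproute2 alone, without bridge-utils or ifconfig. The sketch below is one way to do it; setup_host_side and the DRY_RUN switch are hypothetical names, and when DRY_RUN is set the commands are only printed, so the steps can be reviewed before running them as root.

```shell
#!/bin/bash
# Run a command, or only print it when DRY_RUN is set.
run() {
    if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# Host-side setup with iproute2: create network namespace NS and a veth pair
# HOST_VETH/CTR_VETH, move CTR_VETH into NS, and attach HOST_VETH to BRIDGE
# (the bridge is created and given BRIDGE_CIDR if it does not exist yet).
setup_host_side() {
    local ns=$1 host_veth=$2 ctr_veth=$3 bridge=$4 bridge_cidr=$5
    run ip netns add "$ns"
    run ip link add "$host_veth" type veth peer name "$ctr_veth"
    run ip link set "$ctr_veth" netns "$ns"
    if ! ip link show "$bridge" >/dev/null 2>&1; then
        run ip link add "$bridge" type bridge
        run ip addr add "$bridge_cidr" dev "$bridge"
    fi
    run ip link set "$bridge" up
    run ip link set "$host_veth" master "$bridge"
    run ip link set "$host_veth" up
}
```

For example, DRY_RUN=1 setup_host_side ns1 veth0 veth1 docker0 172.17.0.1/16 prints the commands; without DRY_RUN they are executed.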

unshare

Because the network namespace is already provided by ip netns, unshare does not need the -n option.

- Enter the network namespace

ip netns exec ns1 bash

- Run unshare

unshare -fpm --mount-proc bash

Configure the IP address inside the container

- Assign an IP address to veth1

ifconfig veth1 172.17.0.2

- Check the interfaces

[root@k8s-master ~]# ip addr

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

17: veth1@if18: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP qlen 1000

link/ether 8a:6b:9e:51:11:42 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.2/16 brd 172.17.255.255 scope global veth1

valid_lft forever preferred_lft forever

inet6 fe80::886b:9eff:fe51:1142/64 scope link

valid_lft forever preferred_lft forever

- Ping the bridge

[root@k8s-master ~]# ping 172.17.0.1

PING 172.17.0.1 (172.17.0.1) 56(84) bytes of data.

64 bytes from 172.17.0.1: icmp_seq=1 ttl=64 time=0.046 ms

64 bytes from 172.17.0.1: icmp_seq=2 ttl=64 time=0.055 ms

- Ping one of Baidu's IP addresses

[root@k8s-master ~]# ping 61.135.185.32

connect: Network is unreachable

Give veth1 access to external networks

- Add a default gateway in the container

route add default gw 172.17.0.1
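The container side can likewise be configured with iproute2 instead of ifconfig and route. This sketch also brings up lo, which is DOWN in the ip addr output above; setup_container_side and DRY_RUN are hypothetical names, and the function is meant to be run inside the container's network namespace.

```shell
#!/bin/bash
# Run a command, or only print it when DRY_RUN is set.
run() {
    if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# Container-side setup with iproute2: give CTR_VETH the address CIDR, bring
# it and the loopback interface up, and send all other traffic to GATEWAY
# (the bridge address on the host).
setup_container_side() {
    local ctr_veth=$1 cidr=$2 gateway=$3
    run ip addr add "$cidr" dev "$ctr_veth"
    run ip link set "$ctr_veth" up
    run ip link set lo up
    run ip route add default via "$gateway"
}
```

For the first container this would be setup_container_side veth1 172.17.0.2/16 172.17.0.1.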

- On the host, enable IP forwarding in the Linux kernel

echo 1 > /proc/sys/net/ipv4/ip_forward

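- On the host, SNAT packets that come from 172.17.0.0/16 and leave through any interface other than docker0. Either rule works; the first names the external interface, the second excludes the bridge:

iptables -t nat -A POSTROUTING -s 172.17.0.0/16 -o enp0s3 -j MASQUERADE

iptables -t nat -A POSTROUTING -s 172.17.0.0/16 ! -o docker0 -j MASQUERADE

- MASQUERADE does not need --to-source because it uses the address currently assigned to the outgoing interface, which may be dynamic.

- SNAT is meant for static IP addresses, which is why it takes --to-source.

- MASQUERADE has extra overhead and is slower than SNAT, because each time a new connection hits the MASQUERADE target it has to look up the address to use.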
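To make the difference concrete: an SNAT rule has the current address written into it. The sketch below, using the hypothetical helper snat_rule, reads that address from ip -o -4 addr show output and prints the SNAT counterpart of the first MASQUERADE rule. If the interface's address changes, the rule has to be regenerated, which is what MASQUERADE avoids.

```shell
#!/bin/bash
# Print an SNAT rule equivalent to the MASQUERADE rule for SUBNET leaving via
# IFACE. SNAT needs a fixed --to-source, so the address is read once from
# `ip -o -4 addr show dev IFACE` output on stdin (field 4 is ADDR/PREFIX).
snat_rule() {
    local subnet=$1 iface=$2 addr
    addr=$(awk '$3 == "inet" { split($4, a, "/"); print a[1]; exit }')
    [ -n "$addr" ] || return 1
    echo "iptables -t nat -A POSTROUTING -s $subnet -o $iface -j SNAT --to-source $addr"
}
```

Usage: ip -o -4 addr show dev enp0s3 | snat_rule 172.17.0.0/16 enp0s3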

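- If the firewall is not enabled, the steps above are already enough to reach external networks. If it is enabled, the following rules may also be needed:

- Accept packets that arrive on docker0 and leave through another interface

iptables -t filter -A FORWARD -i docker0 ! -o docker0 -j ACCEPT

- Accept packets that both arrive on and leave through docker0

iptables -t filter -A FORWARD -i docker0 -o docker0 -j ACCEPT

- Accept packets that belong to established or related connections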

iptables -t filter -A FORWARD -o docker0 -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT
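Running these iptables -A commands twice adds duplicate rules. A minimal sketch that makes the setup repeatable checks for each rule with iptables -C before appending it; ensure_rule and setup_container_nat are hypothetical names, and the subnet and bridge default to the values used in this walkthrough.

```shell
#!/bin/bash
# Append an iptables rule only if an identical one is not already present.
# iptables -C exits non-zero when the rule does not exist.
ensure_rule() {
    local table=$1 chain=$2
    shift 2
    iptables -t "$table" -C "$chain" "$@" 2>/dev/null ||
        iptables -t "$table" -A "$chain" "$@"
}

# The rules from the text: masquerade container traffic that leaves through
# an interface other than the bridge, and accept the forwarded traffic.
setup_container_nat() {
    local subnet=${1:-172.17.0.0/16} bridge=${2:-docker0}
    ensure_rule nat POSTROUTING -s "$subnet" ! -o "$bridge" -j MASQUERADE
    ensure_rule filter FORWARD -i "$bridge" ! -o "$bridge" -j ACCEPT
    ensure_rule filter FORWARD -i "$bridge" -o "$bridge" -j ACCEPT
    ensure_rule filter FORWARD -o "$bridge" -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT
}
```

Calling setup_container_nat as root a second time should leave the rule set unchanged.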

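Deleting an iptables rule

Rules can be deleted by chain and position; for example, the following deletes rule 1 of the nat table's OUTPUT chain. List the positions with iptables -t nat -L --line-numbers (the SNAT rule above is in POSTROUTING):

iptables -t nat -D OUTPUT 1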
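Rule numbers shift whenever rules are added or removed, so deleting by number can hit the wrong rule. iptables -D also accepts the full rule specification; the hypothetical helper below uses that form to remove every copy of a rule.

```shell
#!/bin/bash
# Delete every copy of a rule, identified by the same arguments that were
# passed to -A when it was created, instead of by its position.
remove_rule() {
    local table=$1 chain=$2
    shift 2
    while iptables -t "$table" -C "$chain" "$@" 2>/dev/null; do
        iptables -t "$table" -D "$chain" "$@" || return 1
    done
}
```

Usage: remove_rule nat POSTROUTING -s 172.17.0.0/16 ! -o docker0 -j MASQUERADE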

- Ping one of baidu.com's IP addresses

[root@k8s-master ~]# ping 220.181.38.148

PING 220.181.38.148 (220.181.38.148) 56(84) bytes of data.

64 bytes from 220.181.38.148: icmp_seq=1 ttl=44 time=8.19 ms

64 bytes from 220.181.38.148: icmp_seq=2 ttl=44 time=8.90 ms

- If the network is still unreachable, install traceroute to find where packets stop:

yum install -y traceroute

Port mapping

- Inside ns1, start a simple HTTP server with node:

[root@localhost home]# node app.js

Server started responding on port 3000.

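- From the host, the service is reachable through veth1's IP address (this example was captured on another machine, where veth1 had the address 172.8.0.8; in the setup above it is 172.17.0.2):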

[root@localhost home]# curl -I 172.8.0.8:3000/news/all

HTTP/1.1 200 OK

X-Powered-By: Express

Access-Control-Allow-Origin: *

Access-Control-Allow-Headers: *

Access-Control-Allow-Methods: *

Content-Type: application/json; charset=utf-8

Content-Length: 46

ETag: W/"2e-lldIrdSb4AORaEEyD6KoN+mIA9Q"

Date: Mon, 29 Apr 2019 09:37:50 GMT

Connection: keep-alive

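- To reach the service from another machine through a port on the host, add a DNAT rule that maps host port 3033 to port 3000 of the container, and accept the forwarded traffic. The FORWARD rule matches port 3000 because DNAT in PREROUTING has already rewritten the destination. PREROUTING only sees packets arriving from the network, so this mapping applies to other machines, not to connections made from the host itself: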

[ec2-user@ip-10-24-254-11 ~]$ sudo iptables -t nat -A PREROUTING -p tcp -m tcp --dport 3033 -j DNAT --to-destination 172.8.0.8:3000

[ec2-user@ip-10-24-254-11 ~]$ sudo iptables -t filter -A FORWARD -p tcp -m tcp --dport 3000 -j ACCEPT

$ curl -I 192.168.102.253:3033/news/all

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

0 46 0 0 0 0 0 0 --:--:-- --:--:-- --:--:-- 0

HTTP/1.1 200 OK

X-Powered-By: Express

Access-Control-Allow-Origin: *

Access-Control-Allow-Headers: *

Access-Control-Allow-Methods: *

Content-Type: application/json; charset=utf-8

Content-Length: 46

ETag: W/"2e-lldIrdSb4AORaEEyD6KoN+mIA9Q"

Date: Mon, 29 Apr 2019 09:40:50 GMT

Connection: keep-alive
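The two port-mapping commands can be generated for any mapping. This sketch (port_map_rules is a hypothetical name) prints them for a given host port, container IP and container port. Unlike the commands above, it narrows the FORWARD rule with -d to the container's address instead of accepting all forwarded traffic to that port; that narrowing is a choice made here, not part of the original commands.

```shell
#!/bin/bash
# Print the iptables commands that publish CONTAINER_IP:CONTAINER_PORT on
# HOST_PORT of the host. The FORWARD rule matches the container port because
# PREROUTING has already rewritten the destination by then.
port_map_rules() {
    local host_port=$1 container_ip=$2 container_port=$3
    printf 'iptables -t nat -A PREROUTING -p tcp -m tcp --dport %s -j DNAT --to-destination %s:%s\n' \
        "$host_port" "$container_ip" "$container_port"
    printf 'iptables -t filter -A FORWARD -p tcp -m tcp -d %s --dport %s -j ACCEPT\n' \
        "$container_ip" "$container_port"
}
```

For the first container in this walkthrough: port_map_rules 3033 172.17.0.2 3000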

chroot

chroot . usr/bin/bash

UTS isolation

unshare -fpmu --mount-proc bash

IPC isolation

unshare -fpmui --mount-proc bash

Entering the container

nsenter -t 16014 -m -u -i -n -p -r usr/bin/bash

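16014 is the host PID of the container's process. -m, -u, -i, -n and -p enter its mount, UTS, IPC, network and PID namespaces, and -r sets the root directory to that of the target process.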
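Before using nsenter, it can help to confirm that a process really is in separate namespaces. Each /proc/PID/ns/TYPE entry is a link such as net:[4026531992], and two processes share a namespace when their links are the same; same_ns below is a hypothetical helper built on that. Reading another user's links, including those of PID 1, requires root.

```shell
#!/bin/bash
# Succeed if processes PID1 and PID2 are in the same namespace of TYPE
# (mnt, uts, ipc, net, pid, ...), by comparing their /proc/PID/ns/TYPE links.
# Returns 1 if they differ and 2 if either link cannot be read.
same_ns() {
    local a b
    a=$(readlink "/proc/$1/ns/$3") || return 2
    b=$(readlink "/proc/$2/ns/$3") || return 2
    [ "$a" = "$b" ] || return 1
}
```

For example, same_ns 1 16014 net should fail while the container has its own network namespace.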

CGroup

CPU isolation

- Create a directory named docker under /sys/fs/cgroup/cpu; the following files appear in it automatically:

mkdir /sys/fs/cgroup/cpu/docker

[root@k8s-master docker]# ls

cgroup.clone_children cgroup.procs cpuacct.stat cpuacct.usage cpuacct.usage_all cpuacct.usage_percpu cpuacct.usage_percpu_sys cpuacct.usage_percpu_user cpuacct.usage_sys cpuacct.usage_user cpu.cfs_period_us cpu.cfs_quota_us cpu.rt_period_us cpu.rt_runtime_us cpu.shares cpu.stat notify_on_release tasks

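- Limit the CPU usage of the docker group. cpu.cfs_quota_us is the CPU time, in microseconds, that the group may use in each cpu.cfs_period_us period (100000 by default); -1 means no limit, so 20000 caps the group at 20% of one CPU: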

[root@k8s-master docker]# cat cpu.cfs_quota_us

-1

[root@k8s-master docker]# echo 20000 > cpu.cfs_quota_us

- Add the process to be limited to this cgroup (18859 is its PID):

echo 18859 > tasks

- top shows that the process's CPU usage has dropped to 20%:

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

18859 root 20 0 113124 2692 2544 R 20.0 0.3 8:41.85 deadloop.sh
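Because the quota is a slice of each scheduling period, a percentage has to be converted using the group's period. The sketch below (limit_cpu is a hypothetical helper for the cgroup v1 layout used here) does that conversion and then attaches the process.

```shell
#!/bin/bash
# Cap the cgroup v1 cpu group at CGDIR to PERCENT of one CPU and move PID
# into it: quota = period * percent / 100, where the period is read from the
# group's own cpu.cfs_period_us (100000 us by default, giving 20000 for 20%).
limit_cpu() {
    local cgdir=$1 percent=$2 pid=$3 period
    period=$(cat "$cgdir/cpu.cfs_period_us") || return 1
    echo $(( period * percent / 100 )) > "$cgdir/cpu.cfs_quota_us" || return 1
    echo "$pid" > "$cgdir/tasks"
}
```

With the default period, limit_cpu /sys/fs/cgroup/cpu/docker 20 18859 should be equivalent to the two echo commands above.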

Memory isolation

- Create a directory named docker under /sys/fs/cgroup/memory; the following files appear in it automatically:

mkdir /sys/fs/cgroup/memory/docker

[root@k8s-master docker]# ls

cgroup.clone_children memory.failcnt memory.kmem.limit_in_bytes memory.kmem.tcp.failcnt memory.kmem.tcp.usage_in_bytes memory.max_usage_in_bytes memory.memsw.max_usage_in_bytes memory.numa_stat memory.soft_limit_in_bytes memory.usage_in_bytes tasks

cgroup.event_control memory.force_empty memory.kmem.max_usage_in_bytes memory.kmem.tcp.limit_in_bytes memory.kmem.usage_in_bytes memory.memsw.failcnt memory.memsw.usage_in_bytes memory.oom_control memory.stat memory.use_hierarchy

cgroup.procs memory.kmem.failcnt memory.kmem.slabinfo memory.kmem.tcp.max_usage_in_bytes memory.limit_in_bytes memory.memsw.limit_in_bytes memory.move_charge_at_immigrate memory.pressure_level memory.swappiness notify_on_release

- Set the memory limit, here 64 KiB:

echo 64k > /sys/fs/cgroup/memory/docker/memory.limit_in_bytes

- Add the process to the group:

echo [pid] > /sys/fs/cgroup/memory/docker/tasks
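With a limit as low as 64k, the process is likely to run into it quickly. The hypothetical helpers below set the limit, attach a process, and check memory.failcnt (listed above), which counts how often the group's usage hit the limit; they assume the cgroup v1 memory controller layout shown here.

```shell
#!/bin/bash
# Set the memory limit of the cgroup v1 memory group at CGDIR (the value may
# use k/m/g suffixes, e.g. 64k) and move PID into the group.
limit_memory() {
    local cgdir=$1 limit=$2 pid=$3
    echo "$limit" > "$cgdir/memory.limit_in_bytes" || return 1
    echo "$pid" > "$cgdir/tasks"
}

# Succeed if processes in CGDIR have hit the limit at least once.
memory_limit_hit() {
    local failcnt
    failcnt=$(cat "$1/memory.failcnt") || return 2
    [ "$failcnt" -gt 0 ]
}
```

Usage: limit_memory /sys/fs/cgroup/memory/docker 64k <pid>, then memory_limit_hit /sys/fs/cgroup/memory/docker && echo "limit reached".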

Summary

Network operations on the host

- Create the network namespace

ip netns add ns1

- Create the veth pair

ip link add veth0 type veth peer name veth1

- Move veth1 into the ns1 namespace

ip link set veth1 netns ns1

- Create the bridge

brctl addbr docker0

- Attach veth0 to the bridge and bring it up

brctl addif docker0 veth0

ip link set dev veth0 up

- Assign an IP address to the bridge

ifconfig docker0 172.17.0.1

Starting the container

- Enter the network namespace

ip netns exec ns1 bash

- Run unshare

unshare -fpmui --mount-proc bash

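Network operations inside the container

- Assign an IP address to veth1 in the container

ifconfig veth1 172.17.0.2

- Add a default gateway in the container

route add default gw 172.17.0.1

- On the host, enable IP forwarding and SNAT the container traffic

echo 1 > /proc/sys/net/ipv4/ip_forward

iptables -t nat -A POSTROUTING -s 172.17.0.0/16 ! -o docker0 -j MASQUERADE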

Port mapping

- DNAT on the host

iptables -t nat -A PREROUTING -p tcp -m tcp --dport 3033 -j DNAT --to-destination 172.8.0.8:3000

chroot

chroot . usr/bin/bash

bash-4.2# ps -ef

Error, do this: mount -t proc proc /proc

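- Before running chroot, bind-mount /proc onto the proc directory in the working directory (create it first with mkdir proc):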

mount --bind /proc proc

- Then run ps:

bash-4.2# ps -ef

UID PID PPID C STIME TTY TIME CMD

0 1 0 0 16:29 ? 00:00:00 bash

0 30 1 0 16:32 ? 00:00:00 usr/bin/bash

0 31 30 0 16:32 ? 00:00:00 ps -ef

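- If, after chroot, external IP addresses can be pinged but external domain names do not resolve, copy the host's /etc/resolv.conf into the container (etc/resolv.conf under the working directory).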
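The unprivileged preparation of the working directory can be collected in one place. prepare_rootfs below is a hypothetical helper that creates the proc and data mount points used in this walkthrough and copies the DNS configuration; the bind mounts and chroot still have to be run as root afterwards.

```shell
#!/bin/bash
# Create the mount points used in this walkthrough under ROOT and copy the
# DNS configuration into it. RESOLV defaults to the host's /etc/resolv.conf.
prepare_rootfs() {
    local root=$1 resolv=${2:-/etc/resolv.conf}
    mkdir -p "$root/proc" "$root/data" "$root/etc" || return 1
    cp "$resolv" "$root/etc/resolv.conf"
}
```

Usage from the working directory: prepare_rootfs . and then, as root, mount --bind /proc proc followed by chroot . usr/bin/bash.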

Starting container 2

- [host] ip netns add ns2

- [host] ip link add veth2 type veth peer name veth3

- [host] ip link set veth3 netns ns2

- [host] brctl addif docker0 veth2

- [host] ip link set dev veth2 up

- [host] ip netns exec ns2 bash

- [container] unshare -fpmui --mount-proc bash

- [container] ifconfig veth3 172.17.0.3

- [container] route add default gw 172.17.0.1

- [container] mount --bind /proc proc

- [container] chroot . usr/bin/bash

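The following script prints these commands for container N:

#!/bin/bash

# Print the commands that start container N (N >= 1). Following the manual steps

# above, container N uses namespace nsN and IP 172.17.0.(N+1): container 1 is

# ns1/172.17.0.2 and container 2 is ns2/172.17.0.3.

if [ $# -ne 1 ]; then echo "usage: $0 N" >&2; exit 1; fi

index=$1

ns="ns$index"

ip="172.17.0.$((index + 1))"

veth1="$ns-1" # host end, attached to docker0

veth2="$ns-2" # container end, moved into the namespace

echo "# on the host"

echo "ip netns add $ns"

echo "ip link add $veth1 type veth peer name $veth2"

echo "ip link set $veth2 netns $ns"

echo "brctl addif docker0 $veth1"

echo "ip link set dev $veth1 up"

echo "ip netns exec $ns bash"

echo "# inside the container"

echo "unshare -fpmui --mount-proc bash"

echo "ifconfig $veth2 $ip"

echo "route add default gw 172.17.0.1"

echo "mount --bind /proc proc"

echo "chroot . usr/bin/bash"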