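Background

Knative requires a relatively recent Kubernetes version, and API versions are inconsistent across Kubernetes releases. For example, CRDs used to be served as apiextensions.k8s.io/v1beta1, but v1beta1 was removed in Kubernetes 1.22, leaving only apiextensions.k8s.io/v1. To meet Knative's requirements and to try out recent Kubernetes features, the cluster was upgraded to Kubernetes 1.24.

This article installs it with kubeadm.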

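Node preparation

- Prepare three nodes:

- k8s-master 10.0.2.4

- k8s-node1 10.0.2.5

- k8s-node2 10.0.2.6

- Each node needs at least 2 GB of memory, 2 CPUs and a 20 GB disk

- Set each node's hostname

- Set up passwordless SSH from the master to the nodes

- Configure /etc/hosts on every node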

Linux environment preparation (master & nodes)

Disable SELinux

vi /etc/selinux/config

SELINUX=disabled
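Editing the file only takes effect after a reboot. A minimal sketch that makes the same change without opening vi; the helper name `disable_selinux_config` is hypothetical, and `setenforce 0` is shown as an optional way to switch the running system to permissive mode until the reboot.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set SELINUX=disabled in an SELinux config file.
# Defaults to /etc/selinux/config; pass another path to work on a copy.
disable_selinux_config() {
  local cfg="${1:-/etc/selinux/config}"
  # Only the SELINUX=<value> line matches; SELINUXTYPE= is left untouched
  # because the pattern requires '=' directly after "SELINUX".
  sed -i -E 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}

# On a real node (as root):
#   disable_selinux_config
#   setenforce 0   # permissive until the next reboot
```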

Disable the firewall

systemctl status firewalld

systemctl stop firewalld

systemctl disable firewalld

firewall-cmd --state

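Configure time synchronization (requires Internet access; this step can be skipped)

- Run crontab -e, press i (or a) in the editor to enter insert mode, and add the following job (ntpdate must be installed, e.g. with yum install -y ntpdate):

0 */1 * * * ntpdate time1.aliyun.com

- Save and exit, then check the job with crontab -l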

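Upgrade the kernel (this step can be skipped)

- Import the ELRepo GPG key

rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

- Install kernel-ml (ml is the mainline branch with the latest stable kernel; lt is the long-term support branch). This assumes the ELRepo repository itself is already installed.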

yum --enablerepo="elrepo-kernel" -y install kernel-ml.x86_64

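- Set the grub2 default boot entry to 0

grub2-set-default 0

- Regenerate the grub2 configuration

grub2-mkconfig -o /boot/grub2/grub.cfg

- Reboot so that the new kernel takes effect

reboot

- After the reboot, check the kernel version with uname -ar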
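`grub2-set-default 0` assumes the new kernel is the first menu entry. A small sketch to list the entries with their indices before setting the default; `list_grub_entries` is a hypothetical name, and the awk pattern assumes the usual CentOS 7 layout where each top-level entry starts with `menuentry '<title>'`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "index : title" for each top-level menuentry
# in a grub2 config, to confirm which index the new kernel has.
list_grub_entries() {
  local cfg="${1:-/boot/grub2/grub.cfg}"
  # Titles are single-quoted, so splitting on ' puts the title in $2.
  # Indented (submenu) entries do not match $1 == "menuentry ".
  awk -F"'" '$1 == "menuentry " { printf "%d : %s\n", n++, $2 }' "$cfg"
}

# Usage (as root):
#   list_grub_entries
```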

Load the br_netfilter module

modprobe br_netfilter

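Add the bridge-filtering and IP-forwarding kernel parameters (this step can be skipped if the values are already 1; kubeadm's preflight checks require net.bridge.bridge-nf-call-iptables and net.ipv4.ip_forward to be 1)

- Create the file /etc/sysctl.d/k8s.conf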

cat << EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

vm.swappiness=0

EOF

- Apply the settings

sysctl -p /etc/sysctl.d/k8s.conf

- Check that the module is loaded

[root@k8s-node2 ~]# lsmod | grep br_netfilter

br_netfilter 28672 0
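kubeadm's preflight checks read these values from files under /proc/sys. A sketch of hypothetical helpers that map a sysctl key to its /proc/sys path and compare the current value; the optional `root` argument exists only so the helpers can be exercised against a fake directory tree. The bridge keys only exist once br_netfilter is loaded.

```shell
#!/usr/bin/env bash
# Map a dotted sysctl key to its file under /proc/sys,
# e.g. net.ipv4.ip_forward -> /proc/sys/net/ipv4/ip_forward.
sysctl_path() {
  local root="${2:-}"
  echo "${root}/proc/sys/${1//.//}"
}

# Return 0 if the key exists and currently has the expected value.
check_sysctl() {
  local key="$1" want="$2" root="${3:-}" got
  got=$(cat "$(sysctl_path "$key" "$root")" 2>/dev/null) || return 1
  [ "$got" = "$want" ]
}

# On a real node:
#   for k in net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward; do
#     check_sysctl "$k" 1 && echo "$k ok" || echo "$k NOT set"
#   done
```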

Install ipset/ipvsadm

- Install ipset and ipvsadm

yum install -y ipset ipvsadm

- Configure how the IPVS modules are loaded by listing the modules to load in a script

cat > /etc/sysconfig/modules/ipvs.modules <<EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack

EOF

- Make the script executable, run it, and check that the modules are loaded

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack
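Both `modprobe br_netfilter` and the ipvs.modules script only load modules for the current boot. On systemd-based systems one common way to persist them is a file in /etc/modules-load.d/, which systemd-modules-load reads at boot. A sketch, with the helper name and the file name k8s.conf as assumptions:

```shell
#!/usr/bin/env bash
# Hypothetical helper: write one module name per line, the format that
# systemd-modules-load.service reads from /etc/modules-load.d/*.conf.
write_modules_load() {
  local file="$1"; shift
  printf '%s\n' "$@" > "$file"
}

# On a real node (as root):
#   write_modules_load /etc/modules-load.d/k8s.conf \
#     br_netfilter ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack
```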

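Disable swap

- Edit /etc/fstab and comment out the swap entry:

vi /etc/fstab

# /dev/mapper/centos-swap swap

- Reboot the node (or run swapoff -a to turn swap off immediately)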
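The fstab edit can also be done non-interactively. A sketch of a hypothetical `comment_swap` helper that comments out every active fstab line whose filesystem type (third field) is swap; it assumes the standard whitespace-separated fstab layout.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prefix '#' to every uncommented fstab line whose
# filesystem type (3rd field) is "swap". Defaults to /etc/fstab.
comment_swap() {
  local fstab="${1:-/etc/fstab}" tmp
  tmp=$(mktemp) || return 1
  awk '!/^[[:space:]]*#/ && $3 == "swap" { $0 = "#" $0 } { print }' "$fstab" > "$tmp" \
    && cat "$tmp" > "$fstab"   # cat keeps the original file's owner and mode
  rm -f "$tmp"
}

# On a real node (as root):
#   swapoff -a     # turn swap off now
#   comment_swap   # keep it off after reboot
```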

Docker environment (master & nodes)

Install docker-ce

- Check whether Docker is already installed, and which version

[root@k8s-master ~]# yum list installed | grep docker

docker.x86_64 2:1.13.1-208.git7d71120.el7_9 @extras

docker-client.x86_64 2:1.13.1-208.git7d71120.el7_9 @extras

docker-common.x86_64 2:1.13.1-208.git7d71120.el7_9 @extras

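- If the installed version is not the right one (such as the old docker 1.13 packages above), remove the packages with yum remove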

yum remove -y docker.x86_64

yum remove -y docker-client.x86_64

yum remove -y docker-common.x86_64

- Configure the Aliyun docker-ce mirror repository

wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

- Install Docker

yum install docker-ce -y

or

yum install -y docker-ce-20.10.17-3.el7.x86_64

- Start Docker and enable it at boot

systemctl enable --now docker

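- Switch Docker's cgroup driver to systemd

Create /etc/docker/daemon.json with the following content, then restart Docker with systemctl restart docker so the change takes effect: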

{

"exec-opts":["native.cgroupdriver=systemd"]

}
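A sketch that writes the same daemon.json from a script; the helper `write_daemon_json` is hypothetical and overwrites any existing file, so merge by hand if daemon.json already has other settings. After restarting Docker, `docker info --format '{{.CgroupDriver}}'` should report systemd, matching the kubelet's `cgroupDriver: systemd`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a daemon.json that switches Docker's cgroup
# driver to systemd. WARNING: overwrites the target file.
write_daemon_json() {
  local file="${1:-/etc/docker/daemon.json}"
  mkdir -p "$(dirname "$file")"
  cat > "$file" <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}

# On a real node (as root):
#   write_daemon_json
#   systemctl restart docker
#   docker info --format '{{.CgroupDriver}}'   # expected: systemd
```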

Install cri-dockerd

Prepare the Go environment

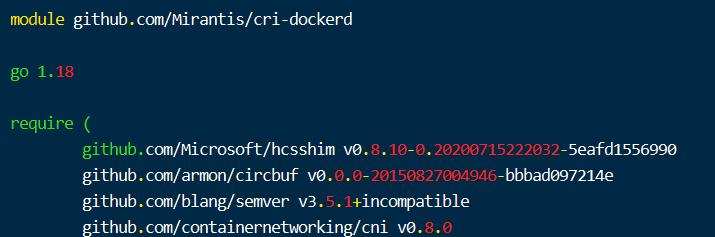

- Get the Go installation package. cri-dockerd depends on a specific Go version, so Go 1.18 is downloaded here; a project's required Go version is given in its go.mod file:

- Download Go 1.18

wget https://golang.google.cn/dl/go1.18.3.linux-amd64.tar.gz

- Extract Go into /usr/local

tar -C /usr/local/ -zxvf ./go1.18.3.linux-amd64.tar.gz

- Create the GOPATH directory

mkdir /home/gopath

- Set the environment variables by appending the following to /etc/profile

export GOROOT=/usr/local/go

export GOPATH=/home/gopath

export PATH=$PATH:$GOROOT/bin:$GOPATH/bin

- Reload /etc/profile

source /etc/profile

- Configure the Go module proxy

go env -w GOPROXY="https://goproxy.io,direct"

- Verify the installation with go version

[root@k8s-node1 ~]# go version

go version go1.18.3 linux/amd64
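The required Go version can be read from the `go` directive in cri-dockerd's go.mod and compared with the installed toolchain. A sketch with hypothetical helper names:

```shell
#!/usr/bin/env bash
# Print the version from the "go X.Y" directive of a go.mod file.
go_mod_version() {
  awk '$1 == "go" { print $2; exit }' "${1:-go.mod}"
}

# Print the installed toolchain version, e.g. "1.18.3" from
# "go version go1.18.3 linux/amd64".
go_local_version() {
  go version | awk '{ sub(/^go/, "", $3); print $3 }'
}

# Inside the cri-dockerd checkout:
#   echo "go.mod wants: $(go_mod_version)  installed: $(go_local_version)"
```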

Deploy cri-dockerd

- Clone the cri-dockerd source

git clone https://github.com/Mirantis/cri-dockerd.git

- Enter the cri-dockerd directory

cd cri-dockerd/

- See the Build and install section on the cri-dockerd GitHub page; not every step described there is required, and the steps actually needed here are the ones below

- Download the dependencies and build

go get && go build

- The build produces the cri-dockerd binary:

[root@k8s-node2 cri-dockerd]# ls

backend containermanager go.mod LICENSE metrics README.md utils cmd core go.sum main.go network store VERSION config cri-dockerd libdocker Makefile packaging streaming

- Install the binary and the systemd units, then enable the service:

install -o root -g root -m 0755 cri-dockerd /usr/bin/cri-dockerd

cp -a packaging/systemd/* /etc/systemd/system

systemctl daemon-reload

systemctl enable cri-docker.service

systemctl enable --now cri-docker.socket

Install kubeadm (master & nodes)

Configure the Kubernetes yum repository

The Aliyun mirror in China is used here to install Kubernetes:

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

Install kubeadm, kubelet and kubectl

- Install the latest version available in the repository

yum install kubeadm kubelet kubectl -y

- Alternatively, list the available versions with yum list and install a specific one from the output:

[root@k8s-master cri-dockerd]# yum list kubeadm kubelet kubectl --showduplicates | grep '1.24.3'

kubeadm.x86_64 1.24.3-0 kubernetes

kubectl.x86_64 1.24.3-0 kubernetes

kubelet.x86_64 1.24.3-0 kubernetes

- For example, to install version 1.24.3:

yum install kubeadm-1.24.3-0.x86_64 kubelet-1.24.3-0.x86_64 kubectl-1.24.3-0.x86_64 -y
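To keep kubeadm, kubelet and kubectl on the same release, the version can be held in one place. A small sketch; `k8s_pkgs` is a hypothetical helper that builds names in the `kubeadm-1.24.3-0` form used above. Enabling the kubelet service also avoids kubeadm's warning that the kubelet service is not enabled.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build "<pkg>-<version>-<release>" names for the
# three Kubernetes packages (release defaults to 0, as in the yum list output).
k8s_pkgs() {
  local version="$1" release="${2:-0}" p
  for p in kubeadm kubelet kubectl; do
    printf '%s-%s-%s ' "$p" "$version" "$release"
  done | sed 's/ $//'
}

# On a real node (as root):
#   yum install -y $(k8s_pkgs 1.24.3)
#   systemctl enable kubelet
```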

Pull the images for the target Kubernetes version

kubeadm config images pull --cri-socket unix:///var/run/cri-dockerd.sock --image-repository registry.aliyuncs.com/google_containers

Output:

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-apiserver:v1.24.3

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-controller-manager:v1.24.3

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-scheduler:v1.24.3

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-proxy:v1.24.3

[config/images] Pulled registry.aliyuncs.com/google_containers/pause:3.7

[config/images] Pulled registry.aliyuncs.com/google_containers/etcd:3.5.3-0

[config/images] Pulled registry.aliyuncs.com/google_containers/coredns:v1.8.6

If kubeadm config images pull fails, the images above can be pulled manually and retagged with the following names:

k8s.gcr.io/kube-apiserver:v1.24.3

k8s.gcr.io/kube-controller-manager:v1.24.3

k8s.gcr.io/kube-scheduler:v1.24.3

k8s.gcr.io/kube-proxy:v1.24.3

k8s.gcr.io/pause:3.7

k8s.gcr.io/etcd:3.5.3-0

k8s.gcr.io/coredns/coredns:v1.8.6
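The manual pull-and-tag route can be scripted. A sketch assuming the images come from registry.aliyuncs.com/google_containers (the mirror used above) and are retagged with the k8s.gcr.io names listed; note that coredns sits under an extra coredns/ path on k8s.gcr.io. The function names are hypothetical; set DRY_RUN=1 to print the docker commands instead of running them.

```shell
#!/usr/bin/env bash
MIRROR="registry.aliyuncs.com/google_containers"
IMAGES="kube-apiserver:v1.24.3 kube-controller-manager:v1.24.3 kube-scheduler:v1.24.3
kube-proxy:v1.24.3 pause:3.7 etcd:3.5.3-0 coredns:v1.8.6"

# Map a short image name to its k8s.gcr.io name (coredns has an extra path level).
gcr_name() {
  case "$1" in
    coredns:*) echo "k8s.gcr.io/coredns/$1" ;;
    *)         echo "k8s.gcr.io/$1" ;;
  esac
}

# Pull each image from the mirror and tag it with its k8s.gcr.io name.
pull_and_tag() {
  local img run=""
  [ "${DRY_RUN:-0}" = 1 ] && run="echo"
  for img in $IMAGES; do
    $run docker pull "$MIRROR/$img" || return 1
    $run docker tag "$MIRROR/$img" "$(gcr_name "$img")" || return 1
  done
}

# Usage: DRY_RUN=1 pull_and_tag   # preview
#        pull_and_tag             # run for real
```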

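Pull the k8s.gcr.io/pause:3.6 image (cri-dockerd's default sandbox image, which is separate from the pause:3.7 image pulled by kubeadm above)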

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6 k8s.gcr.io/pause:3.6
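Instead of retagging, cri-dockerd can be pointed at a different sandbox image with its `--pod-infra-container-image` flag. A sketch that appends the flag to the ExecStart line of the cri-docker.service unit copied earlier; the helper name and the choice of the Aliyun pause:3.7 image are assumptions, so check `cri-dockerd --help` for your build before relying on it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append --pod-infra-container-image=<image> to the
# cri-dockerd ExecStart line of a systemd unit, unless the flag is already set.
set_cri_pause_image() {
  local image="$1" unit="${2:-/etc/systemd/system/cri-docker.service}"
  grep -q -- '--pod-infra-container-image' "$unit" && return 0
  sed -i -E "s|^(ExecStart=.*cri-dockerd.*)$|\1 --pod-infra-container-image=${image}|" "$unit"
}

# On a real node (as root):
#   set_cri_pause_image registry.aliyuncs.com/google_containers/pause:3.7
#   systemctl daemon-reload && systemctl restart cri-docker
```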

Deploy the Kubernetes cluster

init (master node)

kubeadm init --kubernetes-version=v1.24.3 --pod-network-cidr=10.224.0.0/16 --apiserver-advertise-address=10.0.2.4 --cri-socket unix:///var/run/cri-dockerd.sock --image-repository registry.aliyuncs.com/google_containers

Output:

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.0.2.4:6443 --token e4whsk.zja9a4isn37h74jh \

--discovery-token-ca-cert-hash sha256:576628b3198e8807d50327d71b72b61a367f6c4e39325773f047c190ea75e2b4

If init fails, clear the state left by kubeadm with reset:

kubeadm reset --cri-socket unix:///var/run/cri-dockerd.sock

Then run the init command again.
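The init flags can also be kept in a kubeadm configuration file, which is easier to reuse after a reset. A sketch using the kubeadm.k8s.io/v1beta3 config API used by kubeadm 1.24; the file name kubeadm-config.yaml and the helper `write_kubeadm_config` are assumptions, and it is worth comparing the fields with `kubeadm config print init-defaults` on your own version.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a kubeadm config equivalent to the init flags above.
write_kubeadm_config() {
  local file="${1:-kubeadm-config.yaml}"
  cat > "$file" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 10.0.2.4                      # --apiserver-advertise-address
nodeRegistration:
  criSocket: unix:///var/run/cri-dockerd.sock     # --cri-socket
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: v1.24.3                        # --kubernetes-version
imageRepository: registry.aliyuncs.com/google_containers   # --image-repository
networking:
  podSubnet: 10.224.0.0/16                        # --pod-network-cidr
EOF
}

# On the master (as root):
#   write_kubeadm_config && kubeadm init --config kubeadm-config.yaml
```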

kube-config

- master node

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

- worker nodes

Copy $HOME/.kube/config from the master node to $HOME/.kube/config on each worker node with scp

join (worker nodes)

- join

kubeadm join 10.0.2.4:6443 --token e4whsk.zja9a4isn37h74jh \

--discovery-token-ca-cert-hash sha256:576628b3198e8807d50327d71b72b61a367f6c4e39325773f047c190ea75e2b4 --cri-socket unix:///var/run/cri-dockerd.sock

The token and ca-cert-hash are printed in the init output and can be copied from there; see also the kubeadm join documentation.
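If the init output is lost or the token has expired (tokens created by kubeadm init expire after 24 hours by default), `kubeadm token create --print-join-command` on the master prints a fresh join command. The CA hash alone can be recomputed with openssl, following the pipeline in the kubeadm join documentation; the function name is hypothetical and it assumes an RSA CA key, which is kubeadm's default.

```shell
#!/usr/bin/env bash
# Compute the --discovery-token-ca-cert-hash value (without the "sha256:"
# prefix): SHA-256 of the DER-encoded public key of the cluster CA.
ca_cert_hash() {
  local crt="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -noout -in "$crt" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# On the master:
#   kubeadm token create --print-join-command
#   echo "sha256:$(ca_cert_hash)"
```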

Verification

Preparation

- Create the busybox Deployment

kubectl apply -f busybox.yaml

- Create the nginx Deployment

kubectl apply -f nginx.yaml

- Create the nginx Service

kubectl expose deploy nginx

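Pod access on the same node

- The network did not work at first, because flannel was not installed

- The flannel manifest is included in the appendix

- Edit the manifest:

"Network": "10.244.0.0/16" must be changed to the pod-network-cidr value passed to kubeadm init on the master (10.224.0.0/16 in this setup)

- If the images cannot be pulled, pull them through the Aliyun registry instead

- Reference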
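Because the manifest defaults to 10.244.0.0/16 while the init command above used 10.224.0.0/16, the two must be aligned before applying it. A sketch of a hypothetical helper that rewrites the Network value in a local copy of the manifest; the file name kube-flannel.yml is an assumption.

```shell
#!/usr/bin/env bash
# Hypothetical helper: replace the "Network" CIDR in flannel's net-conf.json
# (embedded in the kube-flannel-cfg ConfigMap) with the given CIDR.
set_flannel_network() {
  local file="$1" cidr="$2"
  sed -i -E "s|(\"Network\": *\")[^\"]*(\")|\1${cidr}\2|" "$file"
}

# Usage:
#   set_flannel_network kube-flannel.yml 10.224.0.0/16
#   kubectl apply -f kube-flannel.yml
```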

Pod access across nodes

Works

Access via Service IP

Works

Access via Service name

Works

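Issues

The master cannot be restarted

kubeadm provides a quick way to set up a single master node, but not a highly available control plane. In this environment, when the master node was rebooted, the apiserver and other control-plane containers on it were destroyed; after it came back up the master was unavailable, and the only fix found was to reset and reinstall:

kubeadm reset --cri-socket unix:///var/run/cri-dockerd.sock

kubeadm init (with the same flags as above)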

yum fails with UnicodeDecodeError

- Create a sitecustomize.py file

vi /usr/lib/python2.6/site-packages/sitecustomize.py

Content:

import sys

sys.setdefaultencoding('UTF-8')

- Note: replace 2.6 in the path with the Python version actually installed on the server (2.6 or 2.7)

yum install fails with "Gpg Keys not imported"

Set gpgcheck and repo_gpgcheck to 0 in the kubernetes.repo file

no matches for kind "Deployment" in version "extensions/v1"

- Running

kubectl api-versions

lists the API versions the cluster supports; extensions/v1 is not among them.

- Running

kubectl api-resources | grep deployment

shows that Deployment belongs to apps/v1, so the manifest should use apiVersion: apps/v1:

[root@k8s-master deployment]# kubectl api-resources|grep deployment

deployments deploy apps/v1 true Deployment
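Manifests written for older clusters can be scanned for API versions that Kubernetes 1.24 no longer serves, such as extensions/v1beta1 and apps/v1beta1/v1beta2 (removed in 1.16) or apiextensions.k8s.io/v1beta1 and rbac.authorization.k8s.io/v1beta1 (removed in 1.22). A sketch; the list is a partial, assumed set rather than a complete deprecation table, and the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Partial list of apiVersions that a Kubernetes 1.24 API server no longer serves.
REMOVED_APIS="extensions/v1beta1 apps/v1beta1 apps/v1beta2 apiextensions.k8s.io/v1beta1 rbac.authorization.k8s.io/v1beta1"

# Print "file:line: apiVersion" for every manifest line that uses a removed API.
find_removed_apis() {
  local api
  for api in $REMOVED_APIS; do
    awk -v api="$api" '$1 == "apiVersion:" && $2 == api { print FILENAME ":" FNR ": " $2 }' "$@"
  done
}

# Usage: find_removed_apis *.yaml
```

When switching a Deployment to apps/v1, a `spec.selector` is also required, since apps/v1 makes it mandatory.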

The pause image keeps getting deleted unexpectedly

Cause: the disk was running out of space; expanding the disk fixed it.
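A likely mechanism is kubelet image garbage collection: once disk usage on the image filesystem reaches imageGCHighThresholdPercent (85% by default), kubelet deletes unused images, and the pause image can be among them. A sketch for checking usage against that threshold; the path /var/lib/docker and the helper names are assumptions, and `df --output` requires GNU coreutils.

```shell
#!/usr/bin/env bash
# Print the used-space percentage (integer) of the filesystem holding a path.
disk_used_pct() {
  df --output=pcent "${1:-/var/lib/docker}" | tail -n 1 | tr -dc '0-9'
}

# Return 0 if a usage percentage is at or above the GC threshold
# (kubelet's imageGCHighThresholdPercent defaults to 85).
over_gc_threshold() {
  local used="$1" threshold="${2:-85}"
  [ "$used" -ge "$threshold" ]
}

# On a node:
#   used=$(disk_used_pct /var/lib/docker)
#   over_gc_threshold "$used" && echo "usage ${used}%: kubelet may start deleting images"
```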

Appendix

busybox deploy

apiVersion: apps/v1
kind: Deployment
metadata:
  name: busybox
  labels:
    app: busybox
spec:
  replicas: 1 # number of replicas
  selector:
    matchLabels:
      app: busybox
  template:
    metadata:
      labels:
        app: busybox
    spec:
      containers:
      - name: busybox
        image: registry.cn-beijing.aliyuncs.com/kevin-public/busybox:1.0.0
        command: [ "top" ]
        ports:
        - containerPort: 80
          name: http
          protocol: TCP
        resources:
          requests:
            cpu: 0.05
            memory: 16Mi
          limits:
            cpu: 0.1
            memory: 32Mi

nginx deploy

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  labels:
    app: nginx
  namespace: default
spec:
  replicas: 1 # number of replicas
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: registry.cn-beijing.aliyuncs.com/kevin-public/nginx:1.0.0
        ports:
        - containerPort: 80
          name: http
          protocol: TCP
        resources:
          requests:
            cpu: 0.05
            memory: 16Mi
          limits:
            cpu: 0.1
            memory: 32Mi

flannel

---
kind: Namespace
apiVersion: v1
metadata:
  name: kube-flannel
  labels:
    pod-security.kubernetes.io/enforce: privileged
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
rules:
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-flannel
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-flannel
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-flannel
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-flannel
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni-plugin
        #image: flannelcni/flannel-cni-plugin:v1.1.0 for ppc64le and mips64le (dockerhub limitations may apply)
        image: docker.io/rancher/mirrored-flannelcni-flannel-cni-plugin:v1.1.0
        command:
        - cp
        args:
        - -f
        - /flannel
        - /opt/cni/bin/flannel
        volumeMounts:
        - name: cni-plugin
          mountPath: /opt/cni/bin
      - name: install-cni
        #image: flannelcni/flannel:v0.19.0 for ppc64le and mips64le (dockerhub limitations may apply)
        image: docker.io/rancher/mirrored-flannelcni-flannel:v0.19.0
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        #image: flannelcni/flannel:v0.19.0 for ppc64le and mips64le (dockerhub limitations may apply)
        image: docker.io/rancher/mirrored-flannelcni-flannel:v0.19.0
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: EVENT_QUEUE_DEPTH
          value: "5000"
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
        - name: xtables-lock
          mountPath: /run/xtables.lock
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni-plugin
        hostPath:
          path: /opt/cni/bin
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg
      - name: xtables-lock
        hostPath:
          path: /run/xtables.lock
          type: FileOrCreate

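View the startup commands

If you want to install Kubernetes from binaries but don't know which flags each component should be started with, you can first bring the cluster up this way and then inspect each component's startup command, for example with kubectl describe: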
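Since kubeadm runs the control-plane components as static pods, the same flags can also be read straight from the manifests in /etc/kubernetes/manifests (the staticPodPath in the kubelet config), even without a working API server. A sketch; `manifest_flags` is a hypothetical helper and assumes kubeadm's layout of one `- --flag=value` list item per line.

```shell
#!/usr/bin/env bash
# Print the command-line flags from a kubeadm static pod manifest, one per line.
manifest_flags() {
  sed -n -E 's/^[[:space:]]*- (--.*)$/\1/p' "$1"
}

# On the master:
#   manifest_flags /etc/kubernetes/manifests/kube-apiserver.yaml
#   # or through the API server:
#   kubectl -n kube-system get pod kube-apiserver-k8s-master -o jsonpath='{.spec.containers[0].command}'
```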

apiserver

[root@k8s-master Traffic-Management-Basics]# kubectl -n kube-system describe pod kube-apiserver-k8s-master

Command:

kube-apiserver

--advertise-address=10.0.2.4

--allow-privileged=true

--authorization-mode=Node,RBAC

--client-ca-file=/etc/kubernetes/pki/ca.crt

--enable-admission-plugins=NodeRestriction

--enable-bootstrap-token-auth=true

--etcd-cafile=/etc/kubernetes/pki/etcd/ca.crt

--etcd-certfile=/etc/kubernetes/pki/apiserver-etcd-client.crt

--etcd-keyfile=/etc/kubernetes/pki/apiserver-etcd-client.key

--etcd-servers=https://127.0.0.1:2379

--kubelet-client-certificate=/etc/kubernetes/pki/apiserver-kubelet-client.crt

--kubelet-client-key=/etc/kubernetes/pki/apiserver-kubelet-client.key

--kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname

--proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.crt

--proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client.key

--requestheader-allowed-names=front-proxy-client

--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt

--requestheader-extra-headers-prefix=X-Remote-Extra-

--requestheader-group-headers=X-Remote-Group

--requestheader-username-headers=X-Remote-User

--secure-port=6443

--service-account-issuer=https://kubernetes.default.svc.cluster.local

--service-account-key-file=/etc/kubernetes/pki/sa.pub

--service-account-signing-key-file=/etc/kubernetes/pki/sa.key

--service-cluster-ip-range=10.96.0.0/12

--tls-cert-file=/etc/kubernetes/pki/apiserver.crt

--tls-private-key-file=/etc/kubernetes/pki/apiserver.key

Mounts:

/etc/kubernetes/pki from k8s-certs (ro)

/etc/pki from etc-pki (ro)

/etc/ssl/certs from ca-certs (ro)

Volumes:

ca-certs:

Type: HostPath (bare host directory volume)

Path: /etc/ssl/certs

HostPathType: DirectoryOrCreate

etc-pki:

Type: HostPath (bare host directory volume)

Path: /etc/pki

HostPathType: DirectoryOrCreate

k8s-certs:

Type: HostPath (bare host directory volume)

Path: /etc/kubernetes/pki

HostPathType: DirectoryOrCreate

scheduler

Command:

kube-scheduler

--authentication-kubeconfig=/etc/kubernetes/scheduler.conf

--authorization-kubeconfig=/etc/kubernetes/scheduler.conf

--bind-address=127.0.0.1

--kubeconfig=/etc/kubernetes/scheduler.conf

--leader-elect=true

Environment: <none>

Mounts:

/etc/kubernetes/scheduler.conf from kubeconfig (ro)

Volumes:

kubeconfig:

Type: HostPath (bare host directory volume)

Path: /etc/kubernetes/scheduler.conf

HostPathType: FileOrCreate

controller-manager

Command:

kube-controller-manager

--allocate-node-cidrs=true

--authentication-kubeconfig=/etc/kubernetes/controller-manager.conf

--authorization-kubeconfig=/etc/kubernetes/controller-manager.conf

--bind-address=127.0.0.1

--client-ca-file=/etc/kubernetes/pki/ca.crt

--cluster-cidr=10.224.0.0/16

--cluster-name=kubernetes

--cluster-signing-cert-file=/etc/kubernetes/pki/ca.crt

--cluster-signing-key-file=/etc/kubernetes/pki/ca.key

--controllers=*,bootstrapsigner,tokencleaner

--kubeconfig=/etc/kubernetes/controller-manager.conf

--leader-elect=true

--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt

--root-ca-file=/etc/kubernetes/pki/ca.crt

--service-account-private-key-file=/etc/kubernetes/pki/sa.key

--service-cluster-ip-range=10.96.0.0/12

--use-service-account-credentials=true

Mounts:

/etc/kubernetes/controller-manager.conf from kubeconfig (ro)

/etc/kubernetes/pki from k8s-certs (ro)

/etc/pki from etc-pki (ro)

/etc/ssl/certs from ca-certs (ro)

/usr/libexec/kubernetes/kubelet-plugins/volume/exec from flexvolume-dir (rw)

Volumes:

ca-certs:

Type: HostPath (bare host directory volume)

Path: /etc/ssl/certs

HostPathType: DirectoryOrCreate

etc-pki:

Type: HostPath (bare host directory volume)

Path: /etc/pki

HostPathType: DirectoryOrCreate

flexvolume-dir:

Type: HostPath (bare host directory volume)

Path: /usr/libexec/kubernetes/kubelet-plugins/volume/exec

HostPathType: DirectoryOrCreate

k8s-certs:

Type: HostPath (bare host directory volume)

Path: /etc/kubernetes/pki

HostPathType: DirectoryOrCreate

kubeconfig:

Type: HostPath (bare host directory volume)

Path: /etc/kubernetes/controller-manager.conf

HostPathType: FileOrCreate

proxy

Command:

/usr/local/bin/kube-proxy

--config=/var/lib/kube-proxy/config.conf

--hostname-override=$(NODE_NAME)

Mounts:

/lib/modules from lib-modules (ro)

/run/xtables.lock from xtables-lock (rw)

/var/lib/kube-proxy from kube-proxy (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-cczwf (ro)

Volumes:

kube-proxy:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: kube-proxy

Optional: false

xtables-lock:

Type: HostPath (bare host directory volume)

Path: /run/xtables.lock

HostPathType: FileOrCreate

lib-modules:

Type: HostPath (bare host directory volume)

Path: /lib/modules

HostPathType:

kube-api-access-cczwf:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

kubelet

/usr/bin/kubelet --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf --config=/var/lib/kubelet/config.yaml --container-runtime=remote --container-runtime-endpoint=unix:///var/run/cri-dockerd.sock --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.7

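- /etc/kubernetes/bootstrap-kubelet.conf: this file does not exist; kubelet only falls back to the bootstrap kubeconfig when the file given by --kubeconfig is missing

- /etc/kubernetes/kubelet.conf: the kubelet's kubeconfig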

- /var/lib/kubelet/config.yaml

[root@k8s-node1 ~]# cat /var/lib/kubelet/config.yaml

apiVersion: kubelet.config.k8s.io/v1beta1
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 0s
    enabled: true
  x509:
    clientCAFile: /etc/kubernetes/pki/ca.crt
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 0s
    cacheUnauthorizedTTL: 0s
cgroupDriver: systemd
clusterDNS:
- 10.96.0.10
clusterDomain: cluster.local
cpuManagerReconcilePeriod: 0s
evictionPressureTransitionPeriod: 0s
fileCheckFrequency: 0s
healthzBindAddress: 127.0.0.1
healthzPort: 10248
httpCheckFrequency: 0s
imageMinimumGCAge: 0s
kind: KubeletConfiguration
logging:
  flushFrequency: 0
  options:
    json:
      infoBufferSize: "0"
  verbosity: 0
memorySwap: {}
nodeStatusReportFrequency: 0s
nodeStatusUpdateFrequency: 0s
rotateCertificates: true
runtimeRequestTimeout: 0s
shutdownGracePeriod: 0s
shutdownGracePeriodCriticalPods: 0s
staticPodPath: /etc/kubernetes/manifests
streamingConnectionIdleTimeout: 0s
syncFrequency: 0s
volumeStatsAggPeriod: 0s
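On kubeadm-managed nodes, runtime-related kubelet flags such as --container-runtime-endpoint and --pod-infra-container-image are usually kept in /var/lib/kubelet/kubeadm-flags.env, which kubeadm writes as a single `KUBELET_KUBEADM_ARGS="..."` line. A sketch that prints those flags one per line; the helper name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print kubelet flags from kubeadm's env file, one per line.
kubeadm_kubelet_flags() {
  local file="${1:-/var/lib/kubelet/kubeadm-flags.env}"
  sed -n -E 's/^KUBELET_KUBEADM_ARGS="(.*)"$/\1/p' "$file" | tr ' ' '\n' | sed '/^$/d'
}

# On a node:
#   kubeadm_kubelet_flags
#   ps -o args= -C kubelet   # full command line of the running kubelet
```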